Table of contents

- Overview of How Googlebot Works

- How Googlebot crawls, renders, and indexes pages

- Vital technical elements for Googlebot optimization

- Creating compelling content for users and Googlebot

- Tracking and analyzing Googlebot activity

- Stay on Top of Google Algorithm Updates

- Final thoughts

Googlebot is the automated crawler that discovers web pages and fetches them so they can be indexed for Google Search. Understanding how Googlebot finds and processes pages, and how Google then ranks them, is essential for anyone who wants their site to perform well in search results. This article provides an in-depth look at how Googlebot works, along with actionable tips you can implement to optimize your site. The goal is to improve your website’s indexing and ranking potential in 2023.

1. Overview of How Googlebot Works

Googlebot starts by crawling the web to discover new and updated content. It identifies links on web pages and follows them to find additional pages to crawl. As Googlebot crawls a site, it fetches each page and Google renders the HTML, which lets it process the page’s full content and structure. Google extracts critical information from the page content and URL, analyzes the words and phrases on the page, and evaluates factors like page speed and mobile-friendliness. This data is aggregated, the page is added to Google’s index, and Google’s ranking systems use signals like relevance, authority, and quality to determine where it appears in search results.

For example, when Googlebot crawls your home page, Google parses elements like the page title, meta description, headings, and body content. It looks at your internal links, site architecture, and URL structure, and it pays attention to page speed, semantic HTML markup, and how well the page displays on mobile. All of these factor into indexing and ranking decisions. Understanding what Google prioritizes helps you decide which elements of your site to optimize first.

The key is to create compelling, valuable content while meeting Google’s technical requirements for crawling, indexing, and ranking. Let’s explore some specific optimization tips you can implement.

2. How Googlebot Crawls, Renders, and Indexes Pages

Googlebot starts the process by crawling billions of web pages across the internet to discover new or updated content. The crawler follows links from page to page and from site to site to find new URLs. When it reaches a page on your site, it fetches the HTML, and Google renders it to process the page’s full content and structure.
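To make link discovery concrete, here is a minimal Python sketch of one crawl step: pulling the links out of a page’s HTML and resolving them to absolute URLs that a crawler could queue next. It uses only the standard library, and the class and function names are hypothetical. It illustrates the general technique, not how Googlebot is actually implemented.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute http(s) URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links against the page URL and drop #fragments,
        # since a fragment only points inside the same document.
        absolute, _fragment = urldefrag(urljoin(self.base_url, href))
        # Skip non-web schemes such as mailto: or javascript:.
        if absolute.startswith(("http://", "https://")) and absolute not in self.links:
            self.links.append(absolute)


def extract_links(html: str, base_url: str) -> list[str]:
    """Return the unique crawlable links found in `html`, in document order."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links
```

A real crawler would add each new URL to a queue, fetch it later, and repeat the process on that page.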

Fetching and Rendering Pages

When Googlebot fetches a page, it requests the HTML along with resources such as images, CSS, and JavaScript from your web server. Google then renders the page, running its JavaScript much as a browser would, and evaluates elements like text, titles, headings, links, and media. Rendering lets Google see the page the way a visitor’s browser does and understand both its content and its markup.

Indexing Pages

After fetching and rendering, Google extracts critical information from the page content and URL. It analyzes words, phrases, and semantics to determine the page’s topic and meaning, then stores the page in Google’s search index, which is what makes it eligible to appear for searchers.
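The core idea of a search index can be illustrated with a toy inverted index, which maps each word to the pages that contain it. This Python sketch is a simplified teaching example that assumes plain-text pages, naive tokenization, and no ranking at all. Google’s real index is far more complex, and its internals are not public.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map each term to the set of page URLs whose text contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the pages that contain every query term (a simple AND query)."""
    terms = tokenize(query)
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results
```

Looking up a term is a single dictionary access, so the index does not have to rescan every page for each query. That is the basic reason search engines build an index at all.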

Ranking Pages

Google’s ranking systems then evaluate many signals, such as mobile-friendliness, site speed, authoritative links, and user experience, to determine search rankings. Pages that best satisfy these evolving algorithms are prioritized in search results, which is why optimizing for how Google crawls, indexes, and ranks pages is crucial for search performance.

3. Vital technical elements for Googlebot optimization

Optimize Site Architecture and Internal Linking

Carefully plan your site architecture and internal linking so that pages are easy for Googlebot to crawl and index. Use a simple, shallow hierarchy in which important pages are only a few clicks from the home page, and add relevant links between related content. This helps Google understand how your pages relate to one another and lets crawlers move through your site efficiently.
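One way to check whether your hierarchy is shallow is to measure click depth: the minimum number of links needed to reach each page from the home page. The sketch below runs a breadth-first search over a hypothetical internal-link map that you would build from your own crawl. Pages that appear in the map but are never reached are orphans, with no internal path leading to them.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the home page; depth = fewest clicks to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], home: str) -> set[str]:
    """Pages mentioned in the link map that cannot be reached from `home`."""
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return all_pages - set(click_depths(links, home))


# Hypothetical internal-link map: page -> pages it links to.
site = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-2"],
    "/old-page": ["/"],
}
```

In this example, `/blog/post-2` sits three clicks deep and `/old-page` is unreachable. Both are candidates for extra internal links.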

Clean, Descriptive URLs

Implement clean, descriptive URLs with targeted keywords that are easy for Googlebot to crawl and users to understand. Avoid overly long or complex URL structures.
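Descriptive URLs are usually derived from the page title. A minimal slug generator might look like the following. The `slugify` name and the eight-word cap are illustrative assumptions for keeping URLs short, not Google rules.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 8) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Fold accented characters to ASCII (e.g. "é" -> "e") and drop anything else.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Keep only runs of letters and digits, so punctuation never reaches the URL.
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words[:max_words])
```

For example, `slugify("How Googlebot Crawls, Renders & Indexes Pages")` yields `how-googlebot-crawls-renders-indexes-pages`.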

Logical Site Navigation

Ensure all site pages are connected through a logical navigation menu and a sitemap. A well-linked architecture leaves no page orphaned, so Googlebot can reach every page by following links.
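Assuming you publish an XML sitemap in the format defined by the sitemaps.org protocol, you can generate one with Python’s standard library. This sketch emits only the required `<loc>` entry for each URL and omits optional fields such as `<lastmod>`. The URLs are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap XML document listing `urls`."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        # ElementTree escapes characters like "&" for us, as the protocol requires.
        ET.SubElement(url_el, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap = build_sitemap(["https://example.com/", "https://example.com/blog/"])
```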

Prioritize Page Speed

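Google favors fast-loading pages, and a server that responds quickly also lets Googlebot crawl more of your site. Optimize images, enable compression, and minimize HTTP requests, then confirm the gains with regular performance testing and monitoring.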
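Compression pays off because HTML, CSS, and JavaScript are text with a lot of repetition. The sketch below simply measures how many bytes gzip would save on a given response body. In practice you enable compression in your web server or CDN configuration rather than in application code, and real savings depend on your content.

```python
import gzip


def compression_savings(body: bytes, level: int = 6) -> float:
    """Return the fraction of bytes saved by gzip-compressing a response body."""
    if not body:
        return 0.0
    compressed = gzip.compress(body, compresslevel=level)
    return 1 - len(compressed) / len(body)


# Highly repetitive markup, standing in for a typical HTML page.
sample_html = b"<p>Googlebot favors fast pages.</p>\n" * 200
```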

Mobile-Friendly Design

Google uses mobile-first indexing, which means it primarily crawls and indexes the mobile version of your pages, so a responsive, mobile-friendly site is essential. Test across devices and optimize for the small-screen experience.
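A responsive layout usually starts with a viewport meta tag that tells mobile browsers to use the device width. The following sketch checks a page’s HTML for that tag. The class and function names are hypothetical, and this is only a quick first check, not a substitute for testing on real devices.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of <meta name="viewport"> if the page has one."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page declares width=device-width in its viewport meta tag."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Parse "width=device-width, initial-scale=1" into {"width": "device-width", ...}.
    settings = {}
    for part in finder.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    return settings.get("width") == "device-width"
```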

Semantic HTML Markup

Use proper HTML elements such as headings, lists, and emphasis so Google can interpret the structure of your content. Write descriptive title tags and meta descriptions, and add alt text to meaningful images.
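You can spot-check these on-page elements with a small parser. This Python sketch uses a hypothetical class name and only the standard library. It collects the title, the meta description, the headings, and any images that have no `alt` attribute. It deliberately does not flag `alt=""`, because an empty alt is the correct markup for purely decorative images.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collects title, meta description, headings, and images missing alt."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.headings: list[tuple[str, str]] = []
        self.images_missing_alt: list[str] = []
        self._current = None  # title/heading tag whose text is being captured
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content")
        elif tag == "img" and "alt" not in attrs:
            self.images_missing_alt.append(attrs.get("src") or "")
        elif tag == "title" or tag in self.HEADINGS:
            self._current, self._buffer = tag, []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            # Collapse whitespace so "<h2>Fast <em>pages</em></h2>" -> "Fast pages".
            text = " ".join("".join(self._buffer).split())
            if tag == "title":
                self.title = text
            else:
                self.headings.append((tag, text))
            self._current = None
```

After calling `audit.feed(html)`, an empty `title`, a `None` meta description, a page without an `h1`, or a non-empty `images_missing_alt` list all point to markup worth revisiting.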

Quality Backlinks

Earn backlinks from reputable sites to signal authority and relevance. Where you can influence a link, such as in a guest article or a partner mention, point it at the most relevant internal page rather than defaulting to your home page.

Optimizing these core technical aspects provides Googlebot with what it needs to properly index and rank your pages.

4. Creating compelling content for users and Googlebot

Now that we’ve covered the basics of how Googlebot interacts with your site, let’s look at how to create content that works for both users and Googlebot:

Optimize Content for Target Keywords

Focus your content on the primary keywords and related long-tail key phrases you want to rank for, and work them in naturally throughout the text.

Create Useful, Informative Content

Provide value to users with in-depth, accurate information and advice that solves problems or satisfies intent. Well-researched, expert-driven content performs well.

Engage with Compelling Content

Capture attention with an engaging writing style. Use storytelling elements and personality. Content written for users first also resonates with Googlebot.

Incorporate Multimedia

Enhance posts with relevant images, graphics, videos, and other media, and support them with titles, alt text, captions, and transcripts. Multimedia elements help satisfy search intent.

The goal is to create content that genuinely serves users while also matching search intent, which is what Google looks for. Provide enough context, expertise, and rich media to keep readers engaged. This builds your site’s reputation as an authority worth ranking well in search results. Optimize these elements while integrating your target keywords to get the best of both worlds.

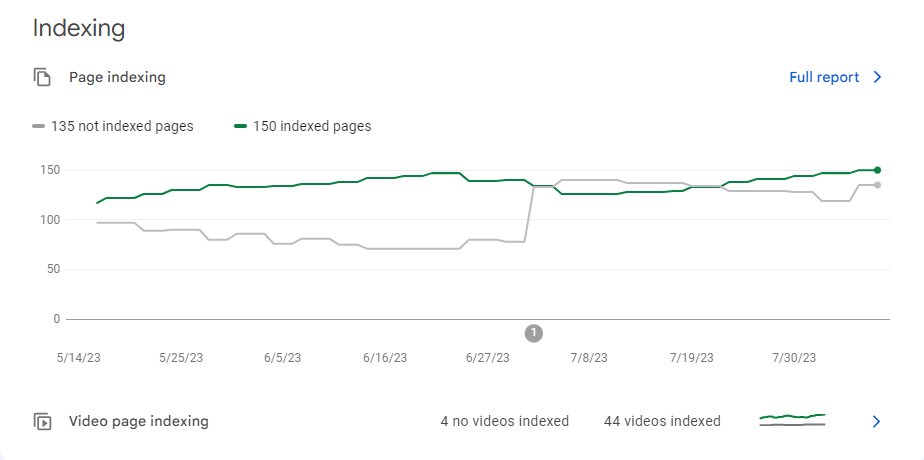

5. Tracking and analyzing Googlebot activity

Here are some tips for tracking and analyzing Googlebot’s activity:

Monitor Google Search Console Data

Google Search Console provides valuable insights into how Googlebot interacts with your site. Analyze crawl stats, indexing and traffic data, manual actions, and optimization opportunities.

Review Google Analytics Traffic

Google Analytics gives you a big-picture view of overall site traffic and performance. Filter for organic search traffic to assess how your Google visibility affects key metrics like sessions, bounce rate, and conversions.

Analyze Log Files

Server access logs record every request Googlebot makes, including the URL crawled, the status code returned, and the timestamp. Together, these show how often Googlebot crawls each page. Log analytics tools can turn these records into deeper optimization insights.
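As a starting point for log analysis, the sketch below parses lines in the common Apache/Nginx “combined” log format and counts the URLs and status codes requested by clients that identify as Googlebot. The log format is an assumption, so adjust the pattern to match your server. User-agent strings can be spoofed, and Google recommends confirming real Googlebot traffic with a reverse DNS lookup that resolves to googlebot.com or google.com, followed by a forward lookup. That verification step is not included here.

```python
import re
from collections import Counter

# Assumed "combined" log format; adapt the pattern to your server's configuration.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines):
    """Count paths and status codes for requests whose user agent claims Googlebot."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip malformed lines and non-Googlebot traffic
        paths[match["path"]] += 1
        statuses[match["status"]] += 1
    return paths, statuses
```

A rising count of 404 or 5xx statuses, or important URLs that never appear in the path counts, are good leads to investigate in Search Console.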

Getting visibility into how Googlebot crawls and indexes your site is crucial for SEO success. Regularly monitor your Search Console and Analytics data to spot issues early. Log file tools provide technical crawler insights to fine-tune site performance. Analyzing these key sources sheds light on Googlebot activity so you can optimize to improve indexing, ranking, and traffic from Google searches.

6. Stay on Top of Google Algorithm Updates

Google regularly rolls out algorithm updates designed to improve search quality and relevance. Stay on top of significant updates in 2023, as well as earlier changes like the ones below that still shape how Google interprets content.

Understand BERT and Neural Matching

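Google’s BERT update, rolled out in late 2019, brought neural-network natural language processing to search. BERT analyzes the context and meaning of words in queries and content. Google has said there is nothing specific to optimize for with BERT. The best approach is clear, natural writing that uses keywords in meaningful context. Neural matching, introduced in 2018, similarly helps Google relate the words in a query to the concepts a page covers.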

Leverage Passage Indexing

Passage ranking, often called passage indexing, lets Google identify and rank a specific relevant section of a long page, not just the page as a whole. Support it with focused, in-depth sections and clear structure, such as descriptive headings and formatting.

Google is constantly evolving its ranking algorithms to better match intent and understand language. Advances like BERT and passage ranking aim to deliver more relevant results for searchers. Follow Google algorithm news and adapt your approach as updates roll out to maintain and improve your search rankings.

7. Final thoughts

Understanding Googlebot is essential for excelling in SEO today and in the future. Googlebot crawls and renders web pages so that Google can index them and evaluate many signals to determine search rankings.

Optimize for Googlebot by improving site architecture, speed, mobile-friendliness, content, and backlink profile. Create compelling content that is focused on target keywords and also satisfies user intent.

Monitor Googlebot’s activity and its impact through Search Console, Analytics, and log files. Stay updated on Google’s evolving search algorithms like BERT.

Focus on building high-quality websites that follow best practices for crawlability, user experience, and search relevance. Provide expertise that searchers find valuable. Satisfying user intent pays off both for visitors and for Googlebot.

As Googlebot becomes more sophisticated, it will become even more important to give it what it wants: great content! Optimizing for it improves discoverability and search traffic. Implement these tips to help your website stand out in SERPs now and in the future.
